Polling is becoming more of an art than a science

We asked pollsters what went wrong — and right — in 2024, and what’s in store for 2026.

We’re 656 days out from the 2026 midterms. Is it way too early for us to write anything about them? Yes. But there’s one group who’ll have to start thinking about 2026 even before we do: pollsters.

If you’re one of our election-minded subscribers, you already have a good idea of what topline polling error looked like last year. Short answer: not great, but we’ve seen worse. So are we going to see any major changes to how polls are conducted in the future? We talked to a bunch of pollsters to find out.

How did the polls do?

For the Official Silver Bulletin Take, you’ll have to wait until we update our pollster ratings. But in terms of how pollsters have reacted, Nate Cohn, who runs the New York Times/Siena College poll, thinks “on balance, people will probably feel okay about it.”

Why the cautious optimism? Well, first, polling error this cycle was slightly below average. We know: often, polls are evaluated by whether they “call” the correct winner, and with such a close presidential race in many states, some polls incorrectly had Kamala Harris winning states she lost — or winning the national popular vote, which she lost, too. Still, the polls missed by about 2.5 points nationally and 2 points in the average swing state.

Natalie Jackson — pollster and Vice President at GQR Insights — thinks pollsters are hesitant to get too excited about this result but sees their mood as optimistic relative to the last few cycles — where large errors dominated the post-election conversation about the polls:

“The good thing is it was better than 2020 and 2016. The not-so-good thing is that we still underestimated Trump, which is concerning. It’s concerning when the bias runs in the same direction three cycles in a row. So… it's really nice that we don’t have a bajillion articles saying death to the polling industry, but I don’t think that means we’re home free either.”

The polls also did well by another metric: they identified emerging trends in the electorate and told a story we might not have seen coming without them. For example, Patrick Ruffini — a pollster at Echelon Insights who wrote a 2023 book about the movement toward the GOP among nonwhite and younger voters — thinks the polls did well by picking up those trends across the past few cycles:

“Speaking, at least from the Republican side, it did give you what you needed to go out and go design a campaign which is really obviously the main use of the polls… for the work we do and from a campaign perspective. Just in pointing… in the direction of the persuadable voters… looking very different, being more diverse, and being younger than they have been in the past. I think from that perspective, the polls were really good in… performing a core critical function.”

The polls also picked up on a general dissatisfaction with the status quo, which Jackson saw as a leading indicator of Donald Trump’s eventual win: “You look at the questions on the economy, on immigration, on top issues, on Biden’s approval rating,” she said. “And the inability to separate Harris from the current administration because she’s part of the current administration was really showing up in the data.”

But the polls were far from perfect. They underestimated Trump for the third time in a row after pollsters spent the cycle trying to avoid that exact result. Now, not every pollster had this problem. AtlasIntel — one of our highest-rated pollsters — pretty much nailed the results this cycle. But Atlas CEO Andrei Roman sees the repeated underestimation of Republicans as a problem for the industry. In his view, the bias toward Democrats “was perhaps not as bad as in previous cycles but… one of the things that helped pollsters with that was the fact that it was a tighter race so… maybe that was actually more luck than a methodological improvement.”

After an otherwise solid year, underestimating Trump again is an obvious blemish on pollsters’ report cards. Unsurprisingly, the issue came up with every pollster we spoke to for this article. Cohn thinks “these concerns will continue in some form or another until the polls either totally nail a high turnout presidential election or even underestimate the Democrats.”

So will we see big changes to the polls before 2026?

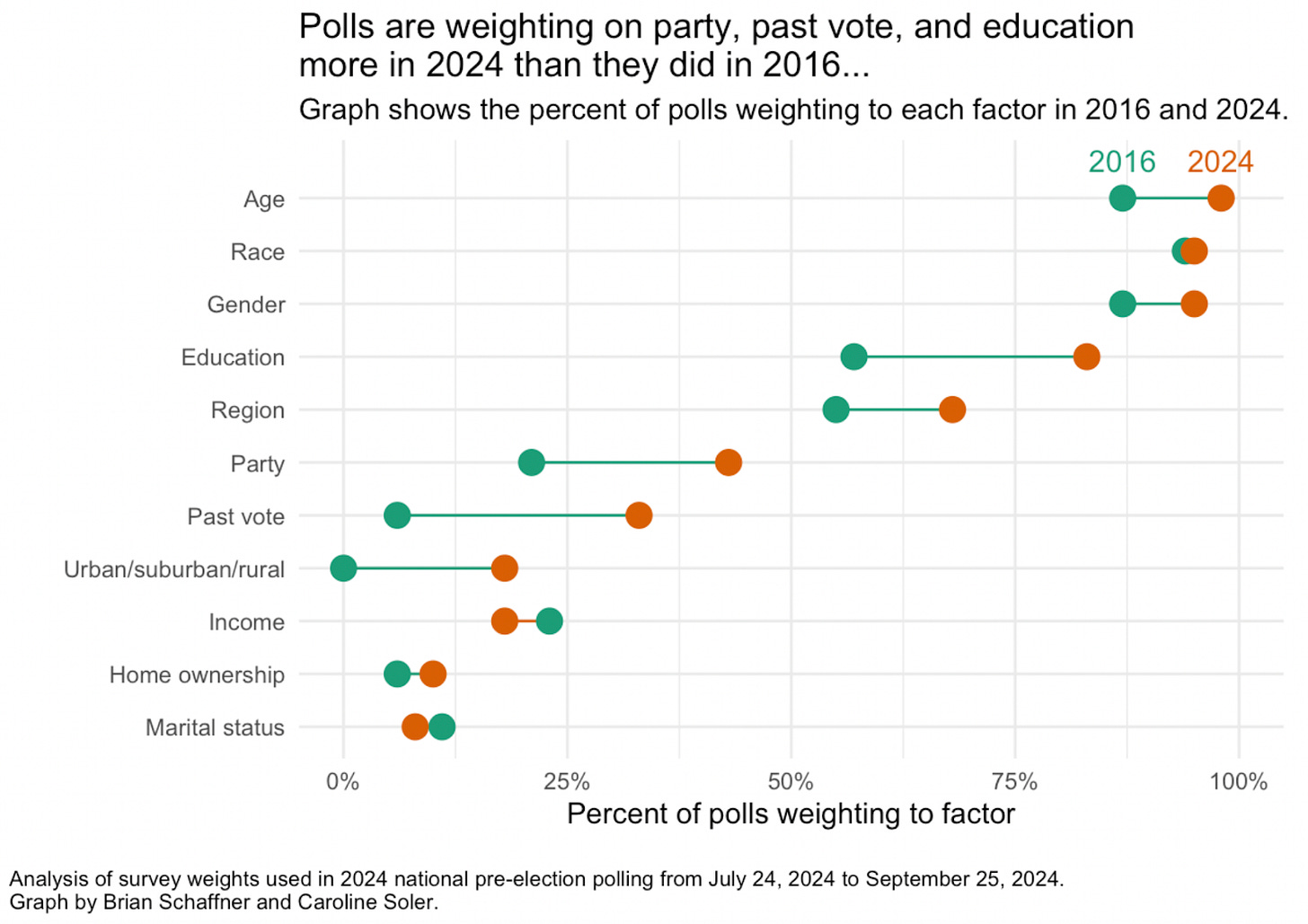

Probably not, in part because there’s not really a consensus as to why the polls were somewhat off. There are some cycles where there is a discrete change in how polls are conducted after a big miss. In 2016, most polls included too many college graduates, who are more likely to respond to surveys and also more likely to vote for Democrats. After the election, the proportion of polls weighting by education skyrocketed as pollsters tried to correct that imbalance and avoid underestimating Trump again.
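To see why that imbalance pulled toplines toward Democrats, here is a minimal sketch of weighting on a single variable, education. Every number in it (the sample mix, the assumed electorate mix, and each group’s Republican support) is hypothetical and chosen for easy arithmetic; real pollsters weight on many variables at once.

```python
# Minimal sketch of cell weighting on one variable (education).
# All shares below are hypothetical, chosen for easy arithmetic.

def cell_weights(sample_shares, population_shares):
    """Weight for each cell = population share / sample share."""
    return {cell: population_shares[cell] / sample_shares[cell] for cell in sample_shares}

def weighted_support(sample_shares, weights, support):
    """Average of each cell's support, counting each cell at sample share * weight."""
    total = sum(sample_shares[c] * weights[c] for c in sample_shares)
    return sum(sample_shares[c] * weights[c] * support[c] for c in sample_shares) / total

sample = {"college": 0.50, "non_college": 0.50}       # raw sample over-represents graduates
population = {"college": 0.40, "non_college": 0.60}   # assumed share of the electorate
gop_support = {"college": 0.40, "non_college": 0.56}  # assumed Republican support by group

weights = cell_weights(sample, population)
raw = sum(sample[c] * gop_support[c] for c in sample)
weighted = weighted_support(sample, weights, gop_support)

print(f"unweighted GOP share: {raw:.3f}")       # 0.480
print(f"weighted GOP share:   {weighted:.3f}")  # 0.496
```

In this toy example, down-weighting graduates (weight 0.8) and up-weighting non-graduates (weight 1.2) moves the Republican share from 48.0% to 49.6%.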

But survey methodology usually evolves gradually. When Mark Blumenthal, the pollster behind the Mystery Pollster blog, looked at the methodology statements from Pew Research Center polls over a 20-year period, he saw that “each successive two years they were adding things. They were weighting on more and more and more… and it’s not all happened in one giant move. These are all projects that have been evolving over time.”

Here’s an example of what that slow-burning change looks like. If you compare polls over the past quarter-century, there’s been a massive change in how polls are conducted — in particular, many polls are now conducted online when that wasn’t really a thing before. But there’s more stability cycle-to-cycle. And changes to the polls post-2024 are probably going to look closer to this slow evolution than to what we saw after 2016.

Why? Well, partly because the pollsters have already changed their ways — and the changes pollsters made in 2024, after a strong year for blue-chip pollsters in 2022, seemed to help at least a little. Cohn thinks pollsters are happy enough with this performance to sit back for a cycle and hold off on any major methodological overhauls:

“Because so much innovation has already happened over the last eight years, because so many pollsters… were probably more or less satisfied with what they produced in 2024, and because those methods the pollsters were using heading into 2024 reflected eight years of experimentation…. I don’t know that I would expect to see too many pollsters making vast changes between now and 2026.”

Some of the problem is perception — and we’d argue that’s not really the pollsters’ fault. Although headlines might tout a mere 1-point advantage in a poll as a candidate “leading,” that grossly overstates the precision of polls. In fact, there’s little reason to expect error to be much lower than it was in 2024. As Blumenthal put it: “If all our measures are within a point-and-a-half of the thing we're trying to estimate, we’re doing pretty well considering that we’re not effectively acquiring random samples anymore.”
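A back-of-the-envelope check shows why a 1-point lead says little. The sketch below uses the textbook 95% margin-of-error formulas, which assume a simple random sample; as Blumenthal notes, modern polls are not random samples, so real-world uncertainty is larger than these numbers. The 48-47 split and the sample size of 1,000 are hypothetical.

```python
import math

Z_95 = 1.96  # critical value for a 95% interval under a normal approximation

def moe_share(p, n, z=Z_95):
    """Margin of error for one candidate's share, assuming simple random sampling."""
    return z * math.sqrt(p * (1 - p) / n)

def moe_lead(p1, p2, n, z=Z_95):
    """Margin of error for the lead p1 - p2 measured within a single poll.

    For multinomial sampling, Var(p1_hat - p2_hat) = (p1 + p2 - (p1 - p2)**2) / n.
    """
    return z * math.sqrt((p1 + p2 - (p1 - p2) ** 2) / n)

n = 1000
p1, p2 = 0.48, 0.47  # hypothetical 1-point "lead"

print(f"MoE on one candidate's share: ±{100 * moe_share(p1, n):.1f} pts")    # ±3.1
print(f"MoE on the lead itself:       ±{100 * moe_lead(p1, p2, n):.1f} pts")  # ±6.0
```

The uncertainty on the gap between two candidates is roughly double the familiar “plus or minus 3” figure, so a 1-point edge sits well inside the noise even before accounting for nonresponse.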

It’s also unclear whether the Republican underestimation problem will show up in the midterms. The 2018 and 2022 polls performed well on this front, with little overall bias. And according to Jackson and Cohn, because Republicans haven’t outperformed their recent midterm polls, pollsters might not be as worried going into 2026 — especially because it's a midterm where the Democrats are expected to fare well. As Jackson told us:

“When Trump is on the ballot — 2016, 2020, 2024 — we underestimate[d] him to varying degrees. In 2018 and 2022, polls [did] a pretty decent job of telling us [what was] going to happen with the congressional races. The hypothesis is that there's something unique to Trump being on the ballot, and that he draws out voters that are not going to come out in a midterm year, and possibly not for a different presidential candidate.”

So if this effect is Trump-specific, there’s no reason to assume that polls will underestimate Republicans once Trump is off the ballot, even in 2028. But — unsurprisingly — there’s a complication: pollsters disagree on whether their difficulty reaching Republican voters is driven mainly by Trump. For example, Cohn doesn’t see it as a Trump-only issue:

“I think there are a lot of reasons to believe that the polls have always struggled to reach less educated voters and people who don't turn out. I think what's unique about Trump is that he fares so well among voters who don't have a college degree, who don't have a reliable track record of voting in elections… [But] the evidence of these problems predates Trump. Surveys taken in the 2000s, even before telephone response rates plummeted, showed way too many college graduates in the unweighted data… These problems have been around for a long time, and I don't expect that the Democrats are going to suddenly come back to parity among these kinds of voters on the periphery of the electorate.”

Ruffini also isn’t convinced that the underestimation is unique to Trump. He thinks we’ll know more after seeing how Republican Senate candidates do in 2026:

“It feels like there's kind of a growing body of evidence that, more often than not in Senate races, you're seeing the polls underestimate the Republican candidate. Which would tend to argue, particularly if that gets replicated more in a midterm environment, that [this] is not necessarily a Trump specific issue, that… it may be a Republican, Democrat issue. If it's a problem of… partisan nonresponse, that's going to show up for every… Republican on the ballot.”

Jackson leans more toward the Republican underestimation problem being Trump-specific, but with the caveat that it will depend on whether the next Republican presidential nominee energizes the same kinds of low-propensity voters that Trump does:

“I'm inclined to think it's a Trump effect, but I think whether it continues depends on who comes after Trump.… So let's say in 2028, JD Vance is the Republican nominee. Does he have the same appeal… does he just inherit the Trump… empire and the Trump following? Or… do Republicans swing in a different direction, and… Nikki Haley becomes the nominee? Does someone like her appeal to establishment Republicans and… pull [us] back to more of a 2012 type of scenario?”

There’s no longer a polling gold standard

The final reason we won’t see much change? There’s no consensus on what’s driving the Republican underestimation problem. Pollsters clearly struggle to reach low-propensity voters, but there isn’t a silver bullet they can use to fix this problem. Maybe they just haven’t found it (or can’t agree on it) yet. Or maybe — more ominously for the industry — it’s just inherently a very hard problem to fix, as Ruffini suggested:

“It's a little bit of a game of Whac-A-Mole to… find and stamp out any kind of tendency for your survey to be comprised exclusively of people who are just very highly politically interested, and… there's not just one variable that's going to control for that. And… maybe there is no variable that can definitively control for it.”

One potential fix that got lots of attention in 2024 was weighting polls based on recalled vote — meaning how people say they voted in the last presidential election. Some pollsters swear by this technique, while others are less convinced.
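Pollsters that weight on recalled vote generally combine it with demographic variables. One standard way to hit several targets at once is raking (iterative proportional fitting), sketched from scratch below. The respondents and targets are hypothetical, and production raking usually adds refinements such as weight trimming that are omitted here.

```python
def rake(respondents, targets, max_iter=1000, tol=1e-12):
    """Iterative proportional fitting (raking).

    respondents: list of dicts mapping each weighting variable to a category
    targets: {variable: {category: population share}}; shares sum to 1
    Returns one weight per respondent, normalized to average 1.
    """
    weights = [1.0] * len(respondents)
    for _ in range(max_iter):
        largest_adjustment = 0.0
        for var, shares in targets.items():
            # Current weighted total in each category of this variable.
            totals = {cat: 0.0 for cat in shares}
            for r, w in zip(respondents, weights):
                totals[r[var]] += w
            grand_total = sum(totals.values())
            # Rescale so this variable's weighted margin matches its target.
            for i, r in enumerate(respondents):
                factor = shares[r[var]] * grand_total / totals[r[var]]
                largest_adjustment = max(largest_adjustment, abs(factor - 1.0))
                weights[i] *= factor
        if largest_adjustment < tol:
            break
    mean_w = sum(weights) / len(weights)
    return [w / mean_w for w in weights]

def weighted_margin(respondents, weights, var):
    """Weighted share of each category of `var`."""
    total = sum(weights)
    out = {}
    for r, w in zip(respondents, weights):
        out[r[var]] = out.get(r[var], 0.0) + w / total
    return out

# Hypothetical sample: too many college graduates and too many recalled Democrats.
respondents = (
    [{"educ": "college", "recall": "D", "vote": "D"}] * 3
    + [{"educ": "college", "recall": "R", "vote": "R"}] * 2
    + [{"educ": "non_college", "recall": "D", "vote": "D"}] * 2
    + [{"educ": "non_college", "recall": "D", "vote": "R"}] * 1  # a vote switcher
    + [{"educ": "non_college", "recall": "R", "vote": "R"}] * 2
)
targets = {
    "educ": {"college": 0.40, "non_college": 0.60},  # assumed population targets
    "recall": {"D": 0.50, "R": 0.50},
}

weights = rake(respondents, targets)
print(weighted_margin(respondents, weights, "educ"))
print(weighted_margin(respondents, weights, "recall"))
print(weighted_margin(respondents, weights, "vote"))
```

Raking matches each margin separately rather than every combination of education and recalled vote, so the joint distribution of the two still comes from the raw sample.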

Cohn (who does not weight the NYT/Siena polls based on recalled vote) found that weighting to recall would have made polls less accurate in every presidential election from 2004 through 2020. In 2024, it looks like the method helped pollsters in most states. But Cohn thinks it “pretty clearly struggled to account for changes in the makeup of the electorate.” So while the toplines might have been better, the crosstabs might have been worse — and polls aren’t just designed to predict results but to explain why changes are occurring.

Even putting aside whether the method will improve polls going forward, there’s also a potential ceiling on improvement. Remember that big change in how polls were weighted after 2016? Weighting to recalled vote was part of it. And it’s now become even more common, said Cohn:

“It's worth noting, most pollsters already were weighting on recalled vote by my count. So… there’s not necessarily a ton of room for more pollsters to embrace the technique. Those that weren't doing so, as far as I could tell, were doing so on relatively firmly-held empirical [or] quasi-philosophical grounds that… will be difficult to overcome.”

This logic extends beyond recalled vote to pretty much every weighting technique. As the polling industry has moved away from random probability sampling, it has also moved away from a single unified standard of how to conduct a poll. Blumenthal explained that a few decades ago, there was a real consensus about the “gold standard” way to poll: random sampling using a technique like random digit dialing (RDD). Most households had at least one working telephone, most people answered their phone, and these polls gave you truly random samples with high response rates; almost no weighting was necessary. Said Blumenthal:

“As I was sort of taught, and as pollsters of my generation believed, we could look to our data as a source of truth if it were collected via probability sample. So the things that you did to make a poll high-quality were you spent money trying to keep the selection probabilistic at every stage. And you did all sorts of things to standardize your methods… so that you did not mess with the random probability sample…. Almost all of [the media polls] were RDD ten or more years ago, using more or less the same methods.”

Jump forward to today, and things look entirely different. And pollsters have little choice but to weight their data.

“You have different surveys done for different reasons, but all of them are getting, at the end, clearly nonrepresentative raw data that has to be weighted to be representative,” Blumenthal said. “The distinction, in my mind, between high-quality and low-quality [polls] is the rigor of the weighting methods.”

OK then, so what do rigorous weighting methods entail? Well, that largely depends on how you take your sample. And pollsters are anything but uniform when it comes to their sampling strategies. The NYT/Siena poll samples from voter registration files, for instance, while YouGov uses nonprobability sampling from their online panel, and CNN uses a probability panel — where people are recruited using a probability sample but then sampled for subsequent surveys using nonprobability methods.

So what could change?

The polls in 2026 won’t look identical to the 2024 polls. Despite the ceiling effect, we’ll probably see even fewer polls that don’t control for the partisanship of their sample — whether by weighting on recalled vote, party registration, or some other method. Ann Selzer’s miss in Iowa gave pollsters even more evidence that the old-school, minimal-weighting approach just doesn’t work anymore.

Pollsters could also come up with more creative ways to implement these partisan controls. Cohn thinks pollsters might weight to recalled vote selectively, or weight on recall without weighting to the outcome of a single election:

“I think that pollsters might choose to weight on past vote across their prior three polls. I think that's the sort of thing that I could imagine if Ann Selzer had chosen to continue to poll… that she could have used recall vote to prevent the extraordinary outlier from her final survey without committing to the idea that the correct target for her polls would be just to weight to the recalled vote from the last election.”
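One way to read Cohn’s suggestion is to use the average recalled vote from a pollster’s own previous three surveys as the weighting target, instead of the official result of the last election. The sketch below assumes that reading; the recall shares and within-group support figures are hypothetical.

```python
from statistics import mean

def pooled_recall_target(prior_polls):
    """Average each recalled-vote group's share across the prior polls."""
    groups = prior_polls[0].keys()
    return {g: mean(poll[g] for poll in prior_polls) for g in groups}

def topline(recall_shares, support):
    """Candidate share when each recall group counts at the given share.

    After cell weighting to a target, each recall group counts at its target share,
    so the weighted topline is the target-weighted average of within-group support.
    """
    return sum(recall_shares[g] * support[g] for g in recall_shares)

# Recalled 2020 vote in the pollster's three prior surveys (hypothetical).
prior = [
    {"D": 0.50, "R": 0.50},
    {"D": 0.51, "R": 0.49},
    {"D": 0.49, "R": 0.51},
]
# New survey whose raw sample skews toward recalled Democrats (hypothetical).
new_recall = {"D": 0.58, "R": 0.42}
dem_support = {"D": 0.93, "R": 0.05}  # Democratic support within each recall group

target = pooled_recall_target(prior)
raw = topline(new_recall, dem_support)
weighted = topline(target, dem_support)
print(f"raw Democratic share:    {raw:.3f}")       # 0.560
print(f"weighted to prior polls: {weighted:.3f}")  # 0.490
```

An unusually skewed sample gets pulled back toward the pollster’s own recent history, without treating the last election’s official result as the target.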

Jackson suggested weighting specific demographic subgroups (white voters, women, etc.) based on past vote instead of applying the weight to the entire sample:

“Quite often what would happen is… black voters would show up generally correctly, but then white voters were skewing too Democratic…. And it was rare in the data that I worked with… for all subgroups to be equally off on recall. Usually there was one particular subgroup or a set of subgroups, where the data came in skewed.”
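Jackson’s idea can be sketched as applying recall weights only inside the subgroups whose recalled vote comes in skewed, leaving the rest of the sample alone. The subgroup labels, respondent counts, and recall target below are hypothetical. Within an adjusted subgroup the weights sum to that subgroup’s original size, so its share of the overall sample does not change.

```python
from collections import Counter

def subgroup_recall_weights(respondents, targets):
    """Weight respondents on recalled vote only within the listed subgroups.

    respondents: list of (subgroup, recalled_vote, current_vote) tuples
    targets: {subgroup: {recalled_vote: target share}}; unlisted subgroups get weight 1
    """
    cell_counts = Counter((g, recall) for g, recall, _ in respondents)
    group_sizes = Counter(g for g, _, _ in respondents)
    weights = []
    for g, recall, _ in respondents:
        if g in targets:
            sample_share = cell_counts[(g, recall)] / group_sizes[g]
            weights.append(targets[g][recall] / sample_share)
        else:
            weights.append(1.0)
    return weights

def weighted_share(respondents, weights, candidate):
    """Weighted share of respondents currently supporting `candidate`."""
    hits = sum(w for (_, _, vote), w in zip(respondents, weights) if vote == candidate)
    return hits / sum(weights)

# Hypothetical sample: white respondents skew toward recalled Democrats.
respondents = (
    [("white", "D", "D")] * 5
    + [("white", "R", "R")] * 5
    + [("Black", "D", "D")] * 3
    + [("Black", "R", "R")] * 1
)
targets = {"white": {"D": 0.40, "R": 0.60}}  # assumed recall target, white voters only

weights = subgroup_recall_weights(respondents, targets)
unweighted = weighted_share(respondents, [1.0] * len(respondents), "D")
print(f"unweighted D share: {unweighted:.3f}")                                 # 0.571
print(f"weighted D share:   {weighted_share(respondents, weights, 'D'):.3f}")  # 0.500
```

In this toy sample only the white subgroup’s recalled vote is corrected, yet the overall Democratic share moves from 57.1% to 50.0%.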

Roman gave some broader suggestions for how pollsters could — or should — change their methods based on the success of AtlasIntel in the last two presidential cycles. One of his recommendations was shifting even more focus toward online recruitment and sampling:

“Why I think we did so well, and perhaps based on that, what other pollsters can incorporate: our society has very much moved toward doing everything digital. So if you do everything digital perhaps you should run polls in a more modern digital way. If you’re always on these apps… it’s kind of weird to answer a phone call and think that will be a good recruitment method for a presidential election. The focus on digital is here to stay.”

Better turnout modeling could also help, even though it’s as much an art as a science. According to Roman, having a good likely voter model and accurately predicting turnout differences between Democrats and Republicans is one of the reasons AtlasIntel’s polls performed so well this cycle:

“One thing that really helped in this US election… is I think our modeling of turnout was very good. It… validated our view that there was going to be a differential advantage for Trump when it comes to turnout. So that hypothesis… is one of the key… reasons for which we did better than most this cycle. Based on that modeling of turnout, you have to have quite an open mind in terms of how you use weights…. It makes sense to weight on vote recall if you think turnout will be more or less the same… between Democrats and Republicans. If one side is more engaged and will turn out more… it may be the case that those weights will not help you as much.”
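A probabilistic likely voter model can be sketched as counting each respondent in proportion to an estimated probability of voting. The probabilities below are hypothetical and simply encode Roman’s scenario of one side turning out at a higher rate; real turnout models typically estimate them from inputs such as vote history and stated intent.

```python
def likely_voter_share(respondents, candidate):
    """Candidate share when each respondent counts in proportion to turnout probability.

    respondents: list of (turnout_probability, vote) pairs
    """
    expected_voters = sum(p for p, _ in respondents)
    expected_for_candidate = sum(p for p, vote in respondents if vote == candidate)
    return expected_for_candidate / expected_voters

def raw_share(respondents, candidate):
    """Candidate share treating every respondent as equally likely to vote."""
    return sum(1 for _, vote in respondents if vote == candidate) / len(respondents)

# Hypothetical registered-voter sample: an even 50/50 split,
# but with an assumed higher turnout probability for Republican supporters.
respondents = [(0.60, "D")] * 50 + [(0.70, "R")] * 50

print(f"D share, all respondents: {raw_share(respondents, 'D'):.3f}")           # 0.500
print(f"D share, likely voters:   {likely_voter_share(respondents, 'D'):.3f}")  # 0.462
```

This is the situation Roman describes: recall targets describe who voted last time, so if one party is more engaged this time, weighting to recalled vote alone will not capture the turnout gap.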

Finally, there’s a broader question of how dynamic to be with one’s weighting. Should pollsters set up a weighting scheme ahead of time and stick to it, or adjust their weighting strategy based on how their sample composition changes over the course of the election? There’s a tradeoff here. Public-facing polls usually try to stick to a predetermined weighting scheme for purposes of transparency. Private polling firms have a bit more leeway and can be more flexible, Jackson explained:

“Big media polls, they do things designed to be transparent. They set their weighting scheme up, and they stick to it…. [Private pollsters] are going… to make adjustments, to correct for things we think are going wrong, and then that's a big difference. And you know, when you don't have to explain to the public everything you're doing and you're answering to a client, that's a lot easier to do.”

AtlasIntel also takes a more dynamic approach to sampling and weighting, which Roman sees as another reason for their success:

“There is an interaction between sampling and weighting, and so you… have to look at both at the same time to work out something that’s really good…. We observe the data we collect. Because we collect so much data, because we do everything online, it makes it easier for us to understand patterns and tendencies when they change. That gives us an edge when it comes to incorporating final adjustments or final changes in weighting. For example, people across an election cycle will become more excited about an election at the very end. Or maybe the supporters of one candidate will become more engaged at the end, or maybe they’ll become frightened that the [other candidate] has a better chance of winning so they’ll start answering polls more. So it’s quite important to understand response rates, and based on that analysis, incorporate changes in how you figure out your weights.”

What about herding?

We wrote about this problem before the election, but for those unfamiliar, herding refers to pollsters not publishing results that differ from the consensus, or tweaking their likely voter models until their results fall in line with it. So while herding is partly a methodological question, pollsters’ incentives matter, too.

For example, will the fact that Donald Trump is suing Ann Selzer over her final Iowa poll make pollsters even more nervous about releasing outliers? Almost certainly. Especially if the lawsuit isn’t dismissed quickly.

But at the same time, the increased emphasis on weighting and modeling can make herding harder to identify. When pollsters weight on things that are correlated with future vote choice — like past vote choice — you’d expect less variance than you would with a truly random unweighted sample. Blumenthal sees the fact that most pollsters use these techniques as the main reason we’re seeing less variation in the polls:

“In most cases… for the name-brand pollsters that we look at… it’s not really their judgment about who’s ahead and who’s behind. It’s their judgment about… how they design their surveys…. If they’re weighting on past vote and they’re weighting on party — or some combination of the two — they're going to be a lot more consistent on the thing they're correlated with, by design… so all these measures end up being more consistent [from] survey to survey. That’s not herding. I think that’s a big reason why you see less variation in some of these aggregates.”
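A common diagnostic for herding compares how much published polls actually vary with how much they should vary from sampling error alone. The sketch below assumes simple random sampling and a roughly tied race, and the margins and sample sizes are hypothetical. A ratio well below 1 means polls cluster more tightly than chance predicts; as Blumenthal points out, heavy weighting on variables like past vote produces that too, so a low ratio is not proof of herding. The check also ignores real opinion shifts and house effects, which would push the ratio up.

```python
import math
from statistics import mean, stdev

def expected_margin_sd(n, p1=0.5, p2=0.5):
    """SD of a poll's estimated margin (p1 - p2) under simple random sampling."""
    return math.sqrt((p1 + p2 - (p1 - p2) ** 2) / n)

def spread_ratio(margins, sample_sizes):
    """Observed SD of poll margins divided by the SD expected from sampling error alone."""
    observed = stdev(margins)
    # Root-mean-square of each poll's expected SD, so mixed sample sizes are handled.
    expected = math.sqrt(mean(expected_margin_sd(n) ** 2 for n in sample_sizes))
    return observed / expected

# Hypothetical final-week margins (R minus D, as proportions) from eight polls of 800 each.
margins = [0.01, 0.00, 0.01, 0.02, 0.01, 0.00, 0.01, 0.01]
sizes = [800] * 8

print(f"spread ratio: {spread_ratio(margins, sizes):.2f}")
```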

But Roman does see herding as an ongoing concern. Why? Because for most pollsters, there are serious commercial consequences to getting an election wrong.

“Maybe in a tighter race, you have two incentives to herd. You… herd to the average and you herd to a place where you don’t have to say who’s going to win…. Of course it’s hard to stay outside of the consensus, because it’s very reputationally challenging to be wrong. It’s easier to be wrong with everyone else and to say ‘let’s analyze what the industry did wrong’ than to be wrong on your own… and you’re stigmatized as a really poor quality pollster…. Our business is very much a reputation-driven business. If your reputation takes a huge hit, then you as a professional, and even worse, the company you represent, will not do well for a considerable time.”

But counterintuitively, Ruffini thinks there might also be an incentive for some pollsters to release weird and potentially wrong outliers. It’s a high-variance, high-payoff move. Most of the time you’ll be wrong and look bad, but you could end up seeming like a genius if things swing your way.

“I think that there are probably fewer polls that are going to not weight on education in the future, but you're still going to have polls that do that, and those polls are going to get discussed. Because what's going to happen is those polls are going to show outlier results, and outlier results get headlines…. Obviously, you could be wrong after the election, or you could be very right…. I think in some cases people are incentivized to publish those high-variance polls that move around a lot. Because those tend to generate more headlines.”

This is coming from a place of total ignorance, but has there been any effort by the polling industry to engage iPhone and Android teams to improve caller ID practices? Most people don’t pick up calls from unknown numbers, but perhaps there would be a slight uptick if the call were marked “Survey” in some way? I’ve already started to see calls from doctors’ offices marked as “Healthcare,” so I assume it could at least help response rates incrementally.

Nice job, Eli! Your writing's getting better. Not in a "your writing was bad" way, just... it takes practice, and that practice is showing!

I deeply want to know the current demographic correlates on positive response to random digit dial sampling.

Hypothesis: landline-owning, caller-ID-not-using grandmothers who wish their grandkids would just, please, for once pick up the phone and give nana a ring. You don't even have to send a handwritten thank-you for that scarf she knit, just give her a call!

This article also suggests that pollsters should be a serious lobby for getting telemarketer scam calls under wraps. It's even incentive compatible for a politician trying to figure out what campaign to run! Know who the pollsters should send as their lobbyist? Ann Selzer.

PS: Not a dig on Selzer. Sometimes you swing and you whiff, but now small pollsters are just going to be more risk averse. That makes it hard to have an independent pollster that's not backed by a big institution (e.g., The New York Times).